DPC Latency Checker is a tiny and portable application that monitors computer activity and verifies if it is able to handle real-time streaming of audio and video data without interruptions.

Contributors

Vish Viswanathan, Karthik Kumar, Thomas Willhalm, Patrick Lu, Blazej Filipiak, Sri Sakthivelu

Introduction

- Latency is the time it takes a piece of data to travel from your computer to the testing network and back. It is especially important for applications such as gaming, where you want to be as up-to-date as possible.

- The poll rate together with the scan rate determines the maximum input latency. For example, if the scan interval is 5 ms and the poll rate is 1000 Hz, the keyboard is polled every 1 ms, so the maximum input latency is 1 + 5 = 6 ms.

An important factor in determining application performance is the time required for the application to fetch data from the processor’s cache hierarchy and from the memory subsystem. In a multi-socket system where Non-Uniform Memory Access (NUMA) is enabled, local memory latencies and cross-socket memory latencies will vary significantly. Besides latency, bandwidth (b/w) also plays a big role in determining performance. So, measuring these latencies and b/w is important to establish a baseline for the system under test, and for performance analysis.

Intel® Memory Latency Checker (Intel® MLC) is a tool used to measure memory latencies and b/w, and how they change with increasing load on the system. It also provides several options for more fine-grained investigation where b/w and latencies from a specific set of cores to caches or memory can be measured as well.

Installation

Intel® MLC supports both Linux and Windows.

Linux

- Copy the mlc binary to any directory on your system

- Intel® MLC dynamically links to GNU C library (glibc/lpthread) and this library must be present on the system

- Root privileges are required to run this tool, as it modifies the H/W prefetch control MSR to enable/disable prefetchers for latency and b/w measurements. Refer to the readme documentation for running without root privileges

- MSR driver (not part of the install package) should be loaded. This can typically be done with 'modprobe msr' command if it is not already included.
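The Linux prerequisites above can be checked before launching the tool. The following is a minimal sketch, not part of Intel® MLC: the function name `mlc_linux_preflight` is hypothetical, and it assumes the MSR driver exposes its usual device node at `/dev/cpu/0/msr` once loaded.

```python
import os


def mlc_linux_preflight(msr_device="/dev/cpu/0/msr"):
    """Report whether the process is root and whether the MSR device node exists.

    Assumption: a loaded msr driver shows up as /dev/cpu/<n>/msr.
    This only inspects the environment; it does not load the driver.
    """
    # os.geteuid is only available on Unix-like systems.
    is_root = hasattr(os, "geteuid") and os.geteuid() == 0
    msr_device_present = os.path.exists(msr_device)
    return {"is_root": is_root, "msr_device_present": msr_device_present}
```

If `msr_device_present` is false, loading the driver with 'modprobe msr' as described above is the likely next step.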

Windows

- Copy mlc.exe and mlcdrv.sys driver to the same directory. The mlcdrv.sys driver is used to modify the h/w prefetcher settings.

Previous releases of MLC provided two sets of binaries (mlc and mlc_avx512). mlc_avx512 was compiled with a newer toolchain to support AVX512 instructions, while the mlc binary supported SSE2 and AVX2 instructions. From the MLC v3.7 release onwards, only one binary is provided, and it supports SSE2, AVX2 and AVX512 instructions. By default, AVX512 instructions are not used, even if the processor supports them, unless the -Z argument is added explicitly to the command line.

HW Prefetcher Control

It is challenging to accurately measure memory latencies on modern Intel processors as they have sophisticated h/w prefetchers. Intel® MLC automatically disables these prefetchers while measuring the latencies and restores them to their previous state on completion. The prefetcher control is exposed through an MSR (see Disclosure of Hardware Prefetcher Control on Some Intel® Processors), and MSR access requires root-level permission. So, Intel® MLC needs to be run as ‘root’ on Linux. On Windows, a signed driver is provided for this MSR access. If Intel® MLC can’t be run with root permissions, please consult the readme.pdf that can be found in the download package.

What Does the Tool Measure

When the tool is launched without any argument, it automatically identifies the system topology and measures the following four types of information. A screen shot is shown for each.

1. A matrix of idle memory latencies for requests originating from each of the sockets and addressed to each of the available sockets

2. Peak memory b/w measured (assuming all accesses are to local memory) for requests with varying amounts of reads and writes

3. A matrix of memory b/w values for requests originating from each of the sockets and addressed to each of the available sockets

4. Latencies at different b/w points

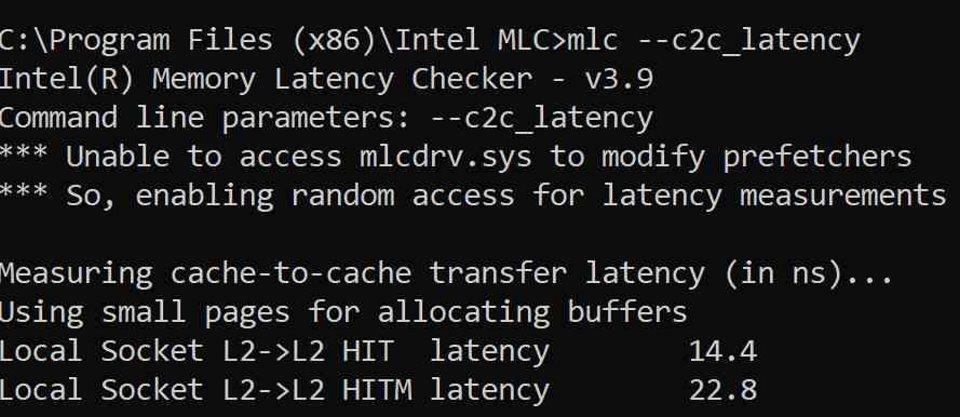

It also measures cache-to-cache data transfer latencies.

Intel® MLC also provides command line arguments for fine grained control over latencies and b/w that are measured.

Here are some of the things that are possible with command line arguments:

- Measure latencies for requests addressed to a specific memory controller from a specific core

- Measure cache latencies

- Measure b/w from a subset of the cores/sockets

- Measure b/w for different read/write ratios

- Measure latencies for random address patterns instead of sequential

- Change stride size for latency measurements

- Measure cache-to-cache data transfer latencies

How Does It Work

One of the main features of Intel® MLC is measuring how latency changes as b/w demand increases. To facilitate this, it creates several threads where the number of threads matches the number of logical CPUs minus 1. These threads are used to generate the load (henceforth, these threads will be referred to as load-generation threads). The primary purpose of the load-generation threads is to generate as many memory references as possible. While the system is loaded like this, the remaining one CPU (that is not being used for load generation) runs a thread that is used to measure the latency. This thread is known as the latency thread and issues dependent reads. Basically, this thread traverses an array of pointers where each pointer is pointing to the next one, thereby creating a dependency in reads. The average time taken for each of these reads provides the latency. Depending on the load generated by the load-generation threads, this latency will vary. Every few seconds the load-generation threads automatically throttle the load generated by injecting delays, thus measuring the latency under various load conditions. Please refer to the readme file in the package that you download for more details.
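The dependent-read idea behind the latency thread can be illustrated with a small pointer-chasing sketch. This is not Intel® MLC's implementation: the function names are hypothetical, and timings taken in Python are dominated by interpreter overhead, so the numbers say nothing about real memory latency. The sketch only shows how each read depends on the previous one.

```python
import random
import time


def build_pointer_chain(n, seed=0):
    """Return a list where chain[i] holds the index of the next element.

    The links form a single random cycle through all n slots, so every
    read depends on the result of the previous one.
    """
    order = list(range(n))
    random.Random(seed).shuffle(order)
    chain = [0] * n
    for pos, idx in enumerate(order):
        chain[idx] = order[(pos + 1) % n]
    return chain


def chase(chain, steps, start=0):
    """Follow the chain for `steps` dependent reads.

    Returns (final index, average nanoseconds per read).
    """
    idx = start
    t0 = time.perf_counter_ns()
    for _ in range(steps):
        idx = chain[idx]  # the next index is only known once this read completes
    elapsed = time.perf_counter_ns() - t0
    return idx, elapsed / steps
```

For example, `chase(build_pointer_chain(1 << 16), 1_000_000)` returns the final position and an average time per read. A random cycle is used here so that a simple stride predictor cannot guess the next address.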

Command Line Arguments

Launching Intel® MLC without any parameters measures several things as stated earlier. However, with command line arguments, each of the following specific actions can be performed in sequence:

mlc --latency_matrix

prints a matrix of local and cross-socket memory latencies

mlc --bandwidth_matrix

prints a matrix of local and cross-socket memory b/w

mlc --peak_injection_bandwidth

prints peak memory b/w (core generates requests at fastest possible rate) for various read-write ratios with all local accesses

mlc --max_bandwidth

prints maximum memory b/w (by automatically varying load injection rates) for various read-write ratios with all local accesses

mlc --idle_latency

prints the idle memory latency of the platform

mlc --loaded_latency

prints the loaded memory latency of the platform

mlc --c2c_latency

prints the cache-to-cache transfer latencies of the platform

mlc -e

do not modify prefetcher settings

There are more options for each of the commands above. These are documented in more detail in the readme file included in the download package.
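For scripted runs, the mode flags listed above can be wrapped in a small helper. This is a sketch under assumptions: the helper names are hypothetical, the binary is assumed to be reachable as `mlc`, and combining `-e` with a mode flag in one invocation is assumed to be accepted. The flags themselves are the ones listed above.

```python
import shutil
import subprocess

# Mode flags as listed in this section; the dictionary keys are our own labels.
MLC_MODES = {
    "latency_matrix": "--latency_matrix",
    "bandwidth_matrix": "--bandwidth_matrix",
    "peak_injection_bandwidth": "--peak_injection_bandwidth",
    "max_bandwidth": "--max_bandwidth",
    "idle_latency": "--idle_latency",
    "loaded_latency": "--loaded_latency",
    "c2c_latency": "--c2c_latency",
}


def build_mlc_command(mode, binary="mlc", keep_prefetchers=False):
    """Build the argument list for one MLC measurement mode."""
    if mode not in MLC_MODES:
        raise ValueError(f"unknown MLC mode: {mode!r}")
    cmd = [binary, MLC_MODES[mode]]
    if keep_prefetchers:
        cmd.append("-e")  # do not modify prefetcher settings
    return cmd


def run_mlc(mode, binary="mlc", keep_prefetchers=False):
    """Run MLC in the given mode and return its standard output as text."""
    if shutil.which(binary) is None:
        raise FileNotFoundError(f"{binary} not found; copy the mlc binary onto PATH first")
    cmd = build_mlc_command(mode, binary, keep_prefetchers)
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout
```

On Linux, `run_mlc("idle_latency")` would normally need to be called from a process running as root, as described in the installation notes.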

Change Log

Version 1.0

- Initial release

Version 2.0

- Support for b/w and loaded latencies added

Version 2.1

- Launch 'spinner' threads on remote node for measuring better remote memory b/w

- Automatically disable numa balancing support (if present) to measure accurate remote memory latencies

Version 2.2

- Fixed a bug in topology detection where certain kernels numbered the CPUs differently. In those cases, consecutive CPU numbers were assigned to the same physical core (for example, CPUs 0 and 1 are both on physical core 0)

Version 2.3

- Support for Windows O/S

- Support for single socket (E3 processor line)

- Support for turning off automatic prefetcher control

Version 3.0

- Support for client processors like Haswell and Skylake

- Allocate memory based on NUMA topology. This allows Intel® MLC to measure latencies on all the numa nodes on a processor like Haswell that supports Cluster-on-Die configuration where there are 4 numa nodes on a 2-socket system. We can also measure latencies to NUMA nodes which have only memory resources without any compute resources

- Support for measuring latencies and bandwidth to persistent memory

- Options to use 256-bit and 512-bit loads and stores in generating bandwidth traffic

- Support for measuring cache-to-cache data transfer latencies

- Control several parameters like read/write ratios, size of buffer allocated, NUMA node to allocate memory from, etc. on a per-thread basis

Version 3.1

- Support for Skylake Server

Version 3.1a

- Fixed an issue where MLC failed on some guest VMs

Version 3.3

- Several fixes for measuring latencies and b/w on Skylake server

Version 3.4

- Added support for multiple numa nodes on a socket on Windows server

- Several enhancements for measuring latencies and b/w on persistent memory

- Changed peak_bandwidth to peak_injection_bandwidth and added the --max_bandwidth option to automatically measure the best possible bandwidth

Version 3.5

- Fixed memory leak issues that caused MLC to fail on large systems (8-socket)

- Added option to flush cache lines to persistent memory

- Added option to only partially load/store a cache line (loading only 16 bytes instead of the entire 64 bytes) to get the best cache b/w

Version 3.6

- Better persistent memory support for Windows o/s

- Fix for numa nodes with no memory

- Fix for allocating more than 128GB memory per thread in b/w tests

- Ability to specify -r -e in main invocation to deal with scenarios where prefetchers can't be turned off

Version 3.7

- Combined mlc and mlc_avx512 versions into one binary.

- Support for specifying where loaded latency thread can run besides the default cpu#0

- Automatically use random access for latency measurements if h/w prefetchers can't be controlled (due to permissions issue or running within a VM)

- Support for NUMA nodes with no CPU resources

Version 3.8

- Added support for 1GB huge pages

- Fixed several issues with running MLC in VMs

- Improved cpu topology detection

Version 3.9

- Added support for data integrity checks. Now, 100% reads can check the data against the expected values and report data corruption

- Fixes to report correct memory b/w if stores are included in the traffic for the next generation Intel Xeon processors

Download

Both Linux and Windows versions of Intel® MLC are included in the download.

One of the worst things to experience with your network is a sudden slowdown. Slow networks can be a disaster if you’re in the middle of an important business process, trying to impress a client, or rushing to complete an urgent task.

High latency can become increasingly problematic as networks grow bigger, as having more connections means more points where delays and issues can occur. These risks become greater as your business connects to cloud servers, uses more applications, or expands to include remote workers and branch offices.

If you’re wondering how to improve latency, I highly recommend understanding and setting up processes for checking and reducing this problem across your network, so when a problem arises, you’re already equipped to handle it.

What is Network Latency?

Network latency is the time it takes for data or a request to go from the source to the destination. Latency in networks is measured in milliseconds. The closer your latency is to zero, the better.

The most common signs of high latency include:

- Your data takes a long time to send, as in an email with a large attachment

- Accessing servers or web-based applications is slow

- Websites do not load

Determining your network latency and improving it so network processes run faster is important for business efficacy, as well as simply making your workday less frustrating.

Best Practices for Monitoring and Improving Network Latency

Before you can improve your network latency, it’s important to first understand how to determine your latency and the different ways you can measure it. By knowing your latency, you can better troubleshoot any problems you’re having to ensure data travels more quickly.

How to Check Network Latency

The first thing you need to do if you think your network is going slowly is to check your current network latency. Using Windows, you can open a command prompt and type tracert followed by the destination you’d like to query, such as cloud.google.com.

How to Measure Network Latency

Once you run the tracert command, you’ll see a list of the routers (hops) on the path to that address, each followed by round-trip time measurements in milliseconds (ms).

Each measurement is the round-trip time from your machine to that hop, so the times on the final line approximate the latency between your machine and the destination. The per-hop values should not be added together. IT administrators or professionals will typically use network monitoring and management tools to get this information automatically.

Latency can either be measured as the Round Trip Time (RTT) or the Time to First Byte (TTFB):

- RTT is defined as the amount of time it takes a packet to get from the client to the server and back.

- TTFB is the amount of time between the client sending a request and the client receiving the first byte of the server’s response.
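As a rough illustration of RTT, the time taken to open a TCP connection is close to one round trip, because the connect call returns once the server's reply to the initial handshake packet arrives. The sketch below uses only the Python standard library; the function names are hypothetical, and when a hostname is passed the measured time also includes name resolution.

```python
import socket
import time
from statistics import median


def tcp_connect_ms(host, port, timeout=3.0):
    """Return the time in milliseconds needed to open a TCP connection.

    This approximates one round trip. If `host` is a name rather than an
    IP address, DNS lookup time is included in the result.
    """
    t0 = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - t0
    return elapsed * 1000.0


def median_rtt_ms(host, port, samples=5):
    """Take several connect-time samples and return the median, in milliseconds."""
    return median(tcp_connect_ms(host, port) for _ in range(samples))
```

For example, `median_rtt_ms("cloud.google.com", 443)` gives an estimate for the destination used earlier. Taking the median of several samples reduces the effect of a single slow attempt.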

How to Reduce Network Latency

When you are considering how to improve network latency, there are different steps you can take at various points across the network. First, make sure other people on your network aren’t using up all the bandwidth or increasing your latency with lots of downloads or streaming. Then, check application performance to ensure no applications are acting in unexpected ways and putting pressure on the network.

Subnetting can also help reduce latency across your network as you can group together endpoints that communicate most frequently with each other. Additionally, consider using traffic shaping and bandwidth allocation measures to improve latency for the business-critical parts of your network. Finally, you can use a load balancer to help offload traffic to parts of the network with the capacity to handle some additional activity.

How to Troubleshoot Network Latency Issues

To confirm whether latency issues originate on your network, you can try disconnecting computers or network devices and restarting all the hardware. Make sure you also have a network device monitor installed so you can check if any of the devices on your network are specifically causing issues. Be aware that even if you fix a bottleneck somewhere in your network, you might simply be creating another one somewhere else.

If you still have latency problems after thoroughly looking at all your local devices, it’s possible the issues are coming from the destination you’re trying to connect to. Troubleshooting issues across a large network becomes complex when you try to pinpoint an issue manually, and I generally recommend troubleshooting tools and software to help you with this task.

How to Test Network Latency

Network latency can be tested with ping, traceroute, or the My Traceroute (MTR) tool. More comprehensive network performance managers can test and check latency alongside their other features.

Measuring and reducing latency matters because maintaining a high-performance and reliable network is a big part of having a successful business. If managed poorly, network issues can become a substantial business risk, so using appropriate management protocols and tools is vital for any professional enterprise.

What Tools Help Improve Network Latency?

Using tools to improve network latency is familiar to most network professionals, and there are several different options with network latency measuring features.

A network performance monitoring tool is the most comprehensive kind of tool you can use, as it normally includes features that let you address both latency and network performance. A tool like SolarWinds® Network Performance Monitor (NPM) also provides functions like network latency testing, network mapping, problem troubleshooting, and general network baselining.

With network monitoring tools, you can typically set network baseline expectations for latency and then set up alerts when the network latency reaches a certain threshold above this baseline. You can also often set up data comparisons between different metrics, so you can see links between different performance issues, such as application performance or errors also affecting network latency. A network mapping tool can also help you pinpoint where within the network the latency and performance issues are occurring, which allows you to troubleshoot problems more quickly.
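The baseline-and-threshold idea can be sketched in a few lines. This is an illustration only, not how any particular monitoring product works: the function name, the use of the median as the baseline, and the 1.5x tolerance are all assumptions.

```python
from statistics import median


def latency_alerts(baseline_samples_ms, new_samples_ms, tolerance=1.5):
    """Flag new latency samples that exceed the baseline by a tolerance factor.

    The baseline is the median of the historical samples, and the alert
    threshold is baseline * tolerance. Returns (threshold, alerts), where
    alerts is a list of (sample index, latency in ms) pairs.
    """
    baseline = median(baseline_samples_ms)
    threshold = baseline * tolerance
    alerts = [(i, ms) for i, ms in enumerate(new_samples_ms) if ms > threshold]
    return threshold, alerts
```

With historical samples of 20, 22, 21, 19 and 23 ms, the baseline is 21 ms and the threshold is 31.5 ms, so new samples of 35 ms and 40 ms would be flagged while 31 ms would not.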

You can also look at using a dedicated traceroute tool to look at packets and how they move across an IP network, including how many “hops” the packet took, the roundtrip time, best time (in milliseconds), as well as the IP addresses and countries the packet traveled through. This can help you pinpoint the places in the network with high latency and troubleshoot those issues if they’re a part of your network under your control.

While many tools include traceroute capabilities in their suite of features, consider whether you need a full performance monitoring tool or if a traceroute tool is enough for your needs. If you’re looking for a basic option, you could use a free traceroute tool like Traceroute NG to find latency and packet loss occurring on a network. It can also detect path changes and send alerts. For a more robust latency monitoring solution, SolarWinds NPM is designed to identify the source and nature of network and application latency, reliability, and other performance problems.

All these tools can help you measure network latency across the entire network or between points. By improving your network speed and reducing latency, your business processes will also make leaps and bounds towards efficiency and high performance.